Meta, formerly known as Facebook, has been at the forefront of AI for over a decade, using it to power its products and services such as News Feed, Facebook Ads, Messenger, and virtual reality. But as the demand for more advanced and scalable AI solutions grows, so does the need for more innovative and efficient AI infrastructure.

At the AI Infra @ Scale event, hosted by Meta’s engineering and infrastructure teams, top executives shared their insights and experiences on building and deploying AI systems at large scale. The event featured a series of new hardware and software projects announced by Meta that aim to support the next generation of AI applications.

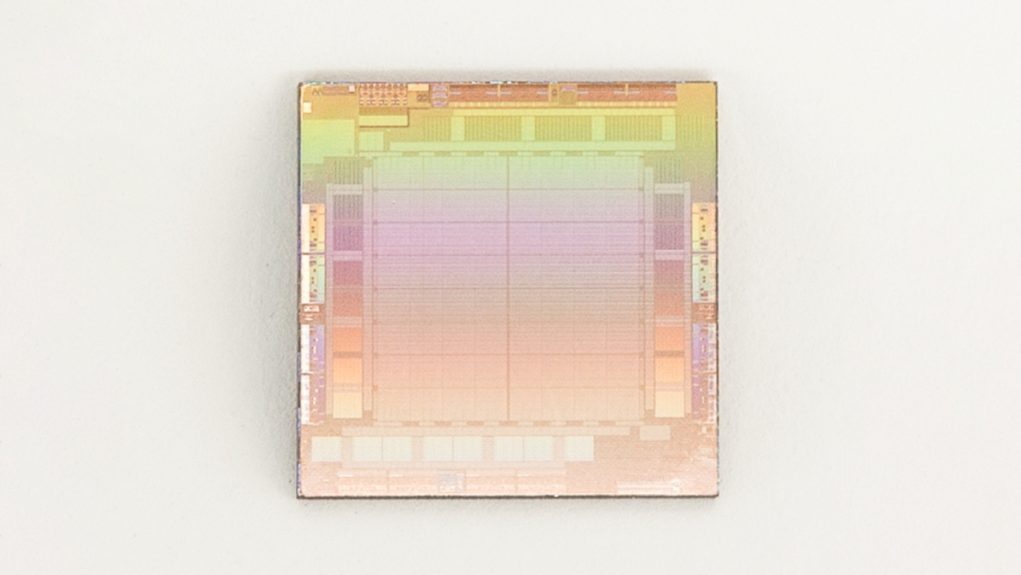

One of the announcements was a new AI data center design optimized for both AI training and inference, the two main phases of developing and running AI models. The new data centers will use Meta’s own silicon, the Meta Training and Inference Accelerator (MTIA), a chip designed to accelerate AI workloads across domains such as computer vision, natural language processing, and recommendation systems.

Meta also revealed that it has already built the Research SuperCluster (RSC), an AI supercomputer that integrates 16,000 GPUs to help train large language models (LLMs) such as LLaMA, which Meta announced at the end of February.
