Cerebras Systems, the AI accelerator pioneer, and UAE-based technology holding group G42 have unveiled Condor Galaxy, which they describe as the world’s largest supercomputer for AI training.

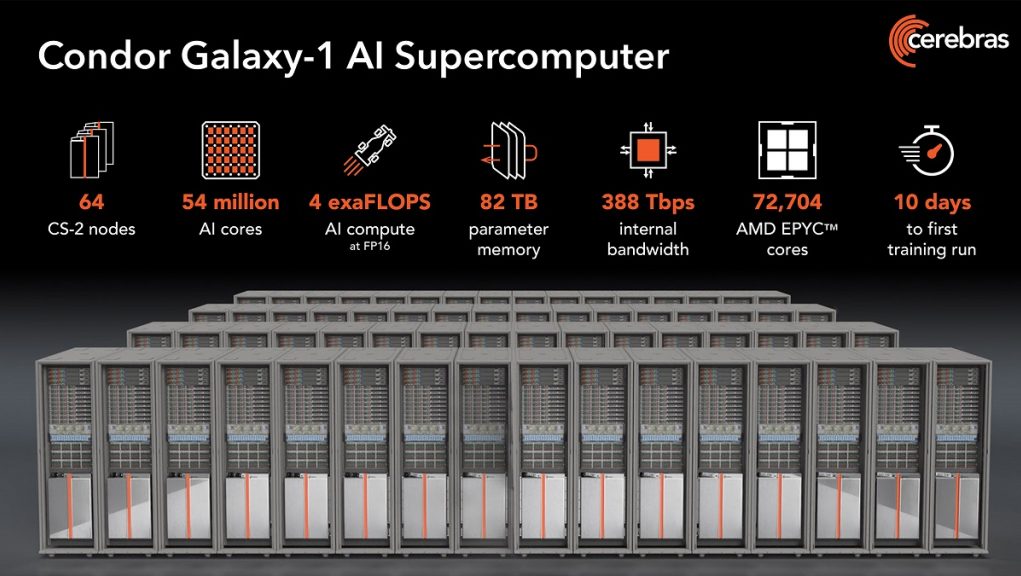

The network of nine interconnected supercomputers promises to reduce AI model training time significantly, with a planned total capacity of 36 exaFLOPs. The first AI supercomputer on the network, Condor Galaxy 1 (CG-1), has 4 exaFLOPs of compute and 54 million cores, said Andrew Feldman, CEO of Cerebras, in an interview with NeuralNation.

Rather than cut wafers into individual chips, as is done for conventional central processing units (CPUs), Cerebras takes entire silicon wafers, each about the size of a pizza, and prints its cores across them. A single wafer holds the equivalent of hundreds of chips, each with many cores. That is how the company gets to 54 million cores in a single supercomputer.
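The arithmetic behind these headline figures can be sketched in a few lines of Python. The system count, per-system exaFLOPs, and core count come from the article. The number of wafers per system (`ASSUMED_WAFERS_PER_SYSTEM = 64`) is an assumption that the article does not state, and the calculation also assumes all nine systems match CG-1 in size. Treat this as a rough illustration, not an official specification.

```python
# Back-of-the-envelope check of the Condor Galaxy figures quoted above.

# Figures taken from the article.
NUM_SUPERCOMPUTERS = 9          # interconnected systems in the network
EXAFLOPS_PER_SYSTEM = 4         # CG-1 capacity, assumed equal for all nine
CORES_PER_SYSTEM = 54_000_000   # cores in CG-1

# Assumption (NOT stated in the article): wafer-scale processors per system.
ASSUMED_WAFERS_PER_SYSTEM = 64


def network_exaflops(systems: int, exaflops_each: float) -> float:
    """Total capacity if every system matches the first one."""
    return systems * exaflops_each


def cores_per_wafer(total_cores: int, wafers: int) -> float:
    """Average number of cores on each wafer in one system."""
    return total_cores / wafers


if __name__ == "__main__":
    total = network_exaflops(NUM_SUPERCOMPUTERS, EXAFLOPS_PER_SYSTEM)
    per_wafer = cores_per_wafer(CORES_PER_SYSTEM, ASSUMED_WAFERS_PER_SYSTEM)
    print(f"Network capacity: {total} exaFLOPs")
    print(f"Cores per wafer (assuming {ASSUMED_WAFERS_PER_SYSTEM} wafers): {per_wafer:,.0f}")
```

Under that wafer-count assumption, each wafer would carry several hundred thousand cores, consistent with the article's description of a wafer holding the equivalent of hundreds of chips.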

In our interview, Feldman said, “AI is not just eating the U.S. AI is eating the world. There’s an insatiable demand for compute. Models are proliferating. And data is the new gold. This is the foundation.”

International research and technology company Cerebras Systems has today revealed a groundbreaking innovation that heralds a new era of artificial intelligence (AI). The CS-1, its new AI training supercomputer, is built around the largest processor ever constructed, with 400,000 processor cores, about 78 times the number of cores of the largest GPU currently available. Combined with its low-latency on-chip memory and unique software architecture, this colossal device is specifically designed to push AI training beyond the limits of traditional supercomputers.

Cerebras CEO Andrew Feldman said: “This processor is in a class of its own. The CS-1 will enable a new era in AI training and provide dramatically faster time-to-insight. With the CS-1, AI researchers and engineers will have access to the massive computing power and unique capabilities necessary to build new classes of AI applications that were previously out of reach with conventional computing technologies. Our focus is on the radical acceleration of AI training, allowing AI engineers to make faster progress towards discoveries that will help address the world’s most important challenges.”

At the center of the CS-1 is Cerebras’ custom-designed, 1.2-trillion-transistor processor, called the Wafer Scale Engine (WSE). Measuring 46,225 mm², roughly 56 times larger than the largest GPU, the WSE is built for efficiency and speed in AI training. With 400,000 cores and 18 GB of on-chip memory, it provides about 9 petabytes per second of memory bandwidth, far more than is typically available in other high-performance computing environments.

Much of the CS-1’s performance lies in its unique software architecture, designed by a team of experts in high-performance computing. This allows for rapid deep learning implementations with frameworks such as PyTorch and TensorFlow, and for the training of models at scales not previously seen in AI research.

The CS-1 is a breakthrough product that could open up an entirely new world of opportunity in AI development. With AI applications becoming increasingly commonplace, its ability to deliver the speed and compute power that efficient training requires is an exciting step forward for the industry.