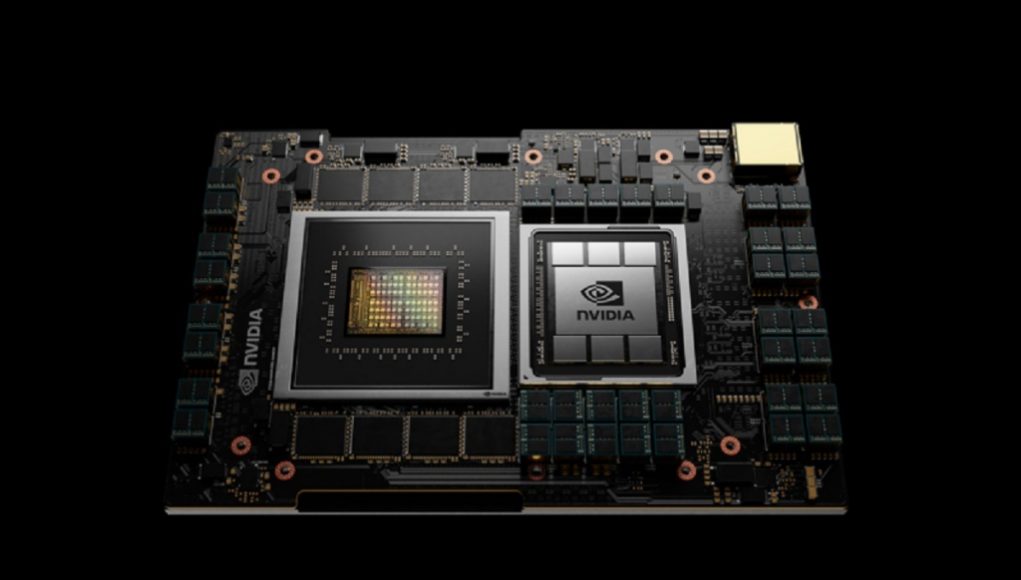

Nvidia has announced that the GH200 Grace Hopper Superchip is now in full production. The chip is set to power systems that run complex AI programs as well as high-performance computing (HPC) workloads. GH200-powered systems join more than 400 system configurations based on Nvidia’s latest CPU and GPU architectures, including Nvidia Grace, Nvidia Hopper, and Nvidia Ada Lovelace, all created to meet the surging demand for generative AI.

At the recent Computex trade show in Taiwan, Nvidia CEO Jensen Huang revealed new systems, partners, and additional details surrounding the GH200 Grace Hopper Superchip. By bringing together the Arm-based Nvidia Grace CPU and Hopper GPU architectures using Nvidia NVLink-C2C interconnect technology, the chip delivers up to 900GB/s total bandwidth, providing incredible compute capability to address the most demanding generative AI and HPC applications.
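To put the 900GB/s figure in perspective, here is a rough back-of-the-envelope sketch of the best-case time needed to move data between the CPU and GPU over the link. The function name and the 90 GB payload are hypothetical, chosen only for illustration. The estimate also assumes the full quoted bandwidth is available to a single transfer. If the "total" figure aggregates both directions, a one-way copy would take longer.

```python
# Peak NVLink-C2C bandwidth quoted in the article, in GB/s.
NVLINK_C2C_TOTAL_GB_PER_S = 900.0


def min_transfer_seconds(payload_gb, bandwidth_gb_per_s=NVLINK_C2C_TOTAL_GB_PER_S):
    """Return a lower bound on transfer time at peak bandwidth.

    Real transfers will be slower: this ignores latency, protocol
    overhead, and contention from other traffic on the link.
    """
    if payload_gb < 0:
        raise ValueError("payload_gb must be non-negative")
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth_gb_per_s must be positive")
    return payload_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    # Hypothetical payload: 90 GB of model weights (illustrative only).
    seconds = min_transfer_seconds(90.0)
    print(f"Best case: {seconds:.3f} s to move 90 GB at 900 GB/s")
```

Treat the result as a floor, not a measurement. Sustained throughput depends on the workload and the software stack.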

On the software side, Nvidia AI Enterprise, the software layer of the Nvidia AI platform, offers over 100 frameworks, pretrained models, and development tools to streamline development and deployment of production AI, including generative AI, computer vision, and speech AI. Systems featuring GH200 Superchips are expected to be available later this year.

To meet the diverse accelerated computing needs of data centers, Nvidia has also unveiled the Nvidia MGX server specification. This modular reference architecture lets system manufacturers quickly and cost-effectively build over 100 server variations to suit a wide range of AI, high-performance computing, and Omniverse applications. ASRock Rack, ASUS, GIGABYTE, Pegatron, QCT, and Supermicro are all set to adopt MGX.

MGX lets system builders select their GPU, DPU, and CPU. It is designed to cater to unique workloads such as data science, edge computing, graphics and video, enterprise AI, and design and simulation. Multiple tasks like AI training and 5G can be handled on a single machine, while upgrades to future hardware generations can be frictionless. MGX can also be easily integrated into cloud and enterprise data centers. QCT and Supermicro will be the first to market, with MGX designs appearing in August. SoftBank also plans to roll out multiple hyperscale data centers across Japan and use MGX to dynamically allocate GPU resources between generative AI and 5G applications.

Nvidia also announced the Nvidia DGX GH200 AI Supercomputer, a new class of large-memory AI supercomputer that enables the development of giant, next-generation models for generative AI language applications, recommender systems, and data analytics workloads. The DGX GH200’s shared memory space uses NVLink interconnect technology with the NVLink Switch System to combine 256 GH200 Superchips, allowing them to perform as a single GPU. This provides 1 exaflop of performance and 144 terabytes of shared memory, nearly 500x more memory than in a single Nvidia DGX A100 system.
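The memory figures for the DGX GH200 can be sanity-checked with simple arithmetic. The sketch below divides the 144 terabytes of shared memory across the 256 superchips and compares the total against a DGX A100. Two inputs are assumptions, not figures from the article: binary units (1 TB = 1024 GB), and a DGX A100 baseline of 320 GB of GPU memory (eight 40 GB GPUs).

```python
# Figures quoted in the article.
NUM_SUPERCHIPS = 256
SHARED_MEMORY_TB = 144

# Assumption (not from the article): binary units, 1 TB = 1024 GB.
GB_PER_TB = 1024

# Assumption (not from the article): the comparison baseline is a
# DGX A100 with 8 x 40 GB GPUs, i.e. 320 GB of GPU memory in total.
DGX_A100_GPU_MEMORY_GB = 320


def memory_per_superchip_gb(total_tb=SHARED_MEMORY_TB, chips=NUM_SUPERCHIPS):
    """Average share of the pooled memory contributed by each superchip."""
    return total_tb * GB_PER_TB / chips


def memory_ratio(total_tb=SHARED_MEMORY_TB, baseline_gb=DGX_A100_GPU_MEMORY_GB):
    """How many times larger the shared pool is than the baseline system."""
    return total_tb * GB_PER_TB / baseline_gb


if __name__ == "__main__":
    print(f"Per superchip: {memory_per_superchip_gb():.0f} GB")  # 576 GB
    print(f"Ratio vs. DGX A100: {memory_ratio():.1f}x")          # 460.8x
```

Under these assumptions each superchip contributes 576 GB, and the pool is about 460 times the baseline. That is roughly in line with the "nearly 500x" claim, though the exact ratio depends on which DGX A100 configuration is used for comparison.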

The DGX GH200 architecture provides 10 times more bandwidth than the previous generation, delivering the power of a massive AI supercomputer with the simplicity of programming a single GPU. Google Cloud, Meta, and Microsoft are among the first expected to gain access to the DGX GH200 to explore its capabilities for generative AI workloads. Nvidia also intends to provide the DGX GH200 design as a blueprint to cloud service providers and other hyperscalers so they can further customize it for their infrastructure.

Lastly, Nvidia announced that a new supercomputer called Nvidia Taipei-1 will bring more accelerated computing resources to Asia to advance the development of AI and industrial metaverse applications. Taipei-1 will expand the reach of the Nvidia DGX Cloud AI supercomputing service into the region with 64 DGX H100 AI supercomputers. The system will also include 64 Nvidia OVX systems to accelerate local research and development, and Nvidia networking to power efficient accelerated computing at any scale. Owned and operated by Nvidia, the system is expected to come online later this year.

Nvidia, one of the world’s leading artificial intelligence and computing firms, recently announced that its latest chip, the GH200 Grace Hopper Superchip, is now in full production. Designed for generative artificial intelligence (AI) and HPC applications, the new chip promises a significant step up in performance and capabilities.

The Grace Hopper Superchip pairs the Arm-based Nvidia Grace CPU with the Nvidia Hopper GPU, linked by the Nvidia NVLink-C2C interconnect at up to 900GB/s of total bandwidth. It has been designed to deliver high performance while also providing an energy-efficient solution for AI developers.

The Grace Hopper Superchip will enable AI developers to train larger and more complex models, drive faster insights, and power large-scale AI inferencing workloads. In addition, it supports a variety of software frameworks, including TensorFlow, PyTorch, and RAPIDS, allowing for increased productivity and faster results.

For AI researchers and developers, the Grace Hopper Superchip presents an opportunity to push the boundaries of what is possible with AI. By providing unprecedented performance, the chip will enable AI researchers to create new and improved models and algorithms, and to develop groundbreaking AI applications.

The Grace Hopper Superchip is the latest development from Nvidia, and serves as a testament to the firm’s commitment to advancing the field of AI. It promises to greatly enhance the capabilities and performance of generative AI applications, and will no doubt be a game-changer in the AI space.